Architecting Computer Vision Platforms: Then vs. Now

Architecting Computer Vision Platforms: Then (Blue) vs. Now (Red)

Why Vision-Language Models Unlock a New Class of Real-World Intelligence

For more than a decade, computer vision has promised to help machines understand the physical world. Early platforms delivered impressive demonstrations—object detection, bounding boxes, classification—but struggled to translate those capabilities into durable, scalable systems that could operate reliably in complex, real-world environments.

The gap was not one of accuracy alone. It was architectural.

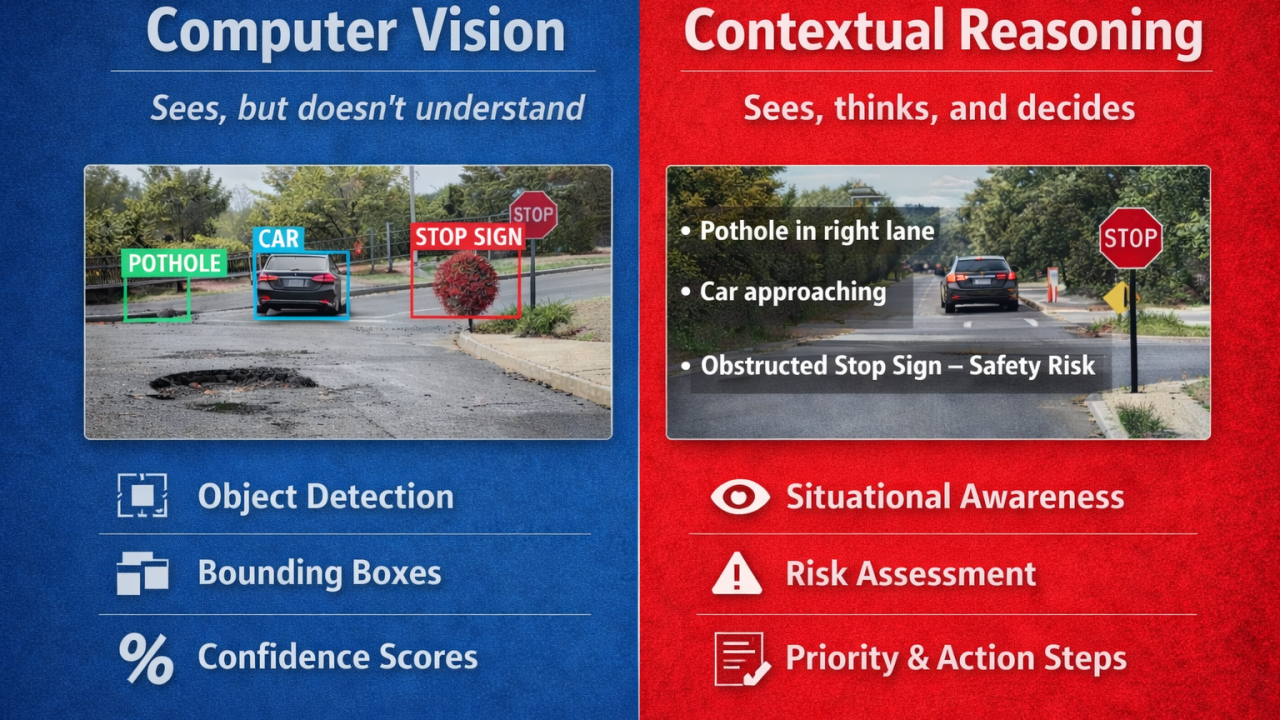

Most computer vision systems were designed to see, not to understand, and certainly not to act. As a result, they remained powerful perception engines—useful, but limited—unable to close the loop between observation, decision-making, and real-world outcomes.

Today, that gap is finally closing. Advances in Vision-Language Models (VLMs), combined with edge computing and multimodal reasoning, have fundamentally changed how computer vision platforms can be architected, deployed, and scaled. This shift enables a new generation of systems designed not just to detect what is visible, but to reason about what it means, why it matters, and what should happen next.

The Past: Computer Vision as Perception Infrastructure

Traditional computer vision platforms were built around a narrow and fragmented model architecture. Each use case required its own specialized pipeline:

· One model for potholes

· Another for signage

· Another for curb ramps

Every new capability meant new data collection, manual labeling, retraining, tuning, and validation. As scope expanded, systems became increasingly brittle, expensive to maintain, and difficult to scale across environments.

More importantly, these platforms produced pixel-level outputs without context. Bounding boxes, segmentation masks, and confidence scores answered a basic question—what is visible in this frame?—but failed to address the questions operators actually care about:

· Why does this matter?

· Does it violate a policy or standard?

· How severe is the issue?

· What action should be taken?

Because the system could not reason, humans had to interpret. Automation stalled, operational impact was limited, and ROI remained constrained.

Architecturally, most platforms were also cloud-first and batch-oriented. They assumed static cameras, fixed viewpoints, reliable connectivity, and delayed analysis. While effective in controlled environments, this approach broke down in dynamic, mobile settings where latency, bandwidth, and coverage density are critical.

Finally, legacy platforms stopped at detection. They flagged issues, generated alerts, and filled dashboards—but they did not track persistence, degradation, resolution, or verification. Without closure, they were poorly suited for operational workflows, compliance, or accountability.

"Most computer vision platforms stop at seeing. Physical Al starts when systems understand context, act in the real world, and verify outcomes.” - Chris Carson, Founder & Global CEO

The Present: From Computer Vision to Intelligence Systems

Modern computer vision platforms are no longer built as collections of isolated models. They are increasingly designed as intelligence systems, centered on context, reasoning, and outcomes.

At the heart of this transition are Vision-Language Models.

VLMs fundamentally change what computer vision systems can do. Rather than simply identifying objects, they interpret scenes. Instead of stating, “There is a stop sign,” a VLM can reason, “This stop sign is partially obscured by vegetation, reducing visibility and likely violating maintenance standards.”

This shift enables semantic understanding, policy-aware classification, and human-readable explanations—capabilities that were largely absent from earlier platforms.

Just as importantly, VLMs reduce fragmentation. A single foundation model can recognize thousands of asset types, adapt to new categories with minimal retraining, and generalize across cities and environments. This eliminates the need to maintain dozens of narrow, task-specific models.

Modern architectures are also multimodal by design, fusing vision with language, time, location, and operational metadata. This allows systems to reason not just about what is visible, but where, when, and in what context it matters.

Advances in edge computing complete the picture. Real-time, on-device inference enables low-latency decisioning, reduced bandwidth costs, and continuous observation at scale—especially critical for mobile platforms operating across large, dynamic environments.

The Line Between Computer Vision and Physical AI

Despite these advances, it is important to draw a clear distinction: computer vision platforms are not inherently Physical AI (If you’re calling it Physical AI now, you might want to read this twice.)

Most remain visual analytics systems. They observe the physical world, but do not meaningfully operate within it.

Physical AI requires more than perception. A system crosses that threshold when it:

1. Is embodied in the physical world (vehicles, machines, infrastructure)

2. Perceives continuously, not episodically

3. Reasons in context, incorporating time, location, policy, and operational state

4. Drives or validates real-world action

5. Closes the loop from observation to verified outcome

In short, Physical AI is defined by agency and embodiment, not perception alone.

I’ve built computer vision platforms in the past, and most were optimized for detection—powerful, but brittle and narrow in scope. They could see, but they could not operate.

EchoTwin AI: Architected for Physical AI

EchoTwin AI represents what happens when a platform is designed from the ground up for this new paradigm.

Rather than retrofitting legacy computer vision architectures, EchoTwin was built as a Physical AI system—one that combines perception, reasoning, and action within real-world operations.

At the core of the platform is EchoTwin’s patented Vision Language Model Framework (VLMF) and proprietary foundational models. Unlike traditional pipelines that rely on rigid taxonomies and narrowly trained detectors, the VLMF enables semantic interpretation of the physical world—understanding not just what is present, but why it matters in context. Visual observations are translated into structured, policy-aware, human-readable intelligence aligned with real operational and regulatory needs.

Crucially, EchoTwin’s AI is embodied. Its models run on edge devices embedded across active municipal fleets—buses, street sweepers, garbage trucks, and inspection vehicles—turning everyday operations into continuous sensing and intelligence-gathering workflows. This embodiment delivers persistent, city-scale coverage that static cameras and manual inspections cannot match.

Beyond perception, EchoTwin’s architecture treats time as a first-class concept. Observations are evaluated across repeated passes, enabling the system to track degradation, persistence, and recurrence. This temporal reasoning supports metrics such as time-to-awareness and time-to-resolution, allowing cities to shift from reactive response to proactive management.

Most importantly, EchoTwin closes the loop. Intelligence flows directly into operational workflows—prioritizing issues, supporting action, and verifying resolution through re-observation and evidence capture. Every issue can be traced from first sighting through confirmed closure, creating a defensible, evidence-grade record of conditions, decisions, and outcomes.

The Result: A Step-Change in What Computer Vision Can Deliver

By combining Vision-Language Models, edge-native deployment, temporal reasoning, and operational integration, EchoTwin moves computer vision from a supporting analytic tool to a system of record for real-world intelligence.

This is the difference between:

· Seeing the world

· And understanding, managing, and improving it at scale

EchoTwin Didn’t Just Adopt Physical AI for Cities—We Defined it.

As cities, infrastructure operators, and enterprises increasingly demand systems that are explainable, defensible, and outcome-driven, the era of narrow, detection-only computer vision platforms is coming to an end.

The future belongs to context-aware, reasoning-based Physical AI systems—and that future is already being built.