From Seeing to Reasoning: How EchoTwin AI Builds Proprietary Vision-Language Models for Physical AI in Cities

Vision-Language Models (VLMs) have made rapid progress over the last few years. Modern models can identify objects, read text in images, and describe scenes with impressive accuracy. Yet most still fall short where real-world operations demand more: reasoning over time, understanding physical context, handling uncertainty, and driving action in complex environments.

Recent advances such as NVIDIA’s Cosmos Reason 2 highlight an important inflection point for Physical AI. These models move beyond perception toward spatio-temporal reasoning, enabling AI systems to see, understand, plan, and act in the physical world—step by step, with common sense and awareness of physics.

At EchoTwin, we view this shift as foundational—but not sufficient on its own. Cities are not robots, and streets are not controlled lab environments. Building Physical AI for cities requires domain-specific reasoning models, deeply aligned with policy, infrastructure, operations, and accountability. That is why EchoTwin is developing proprietary Vision-Language Models purpose-built for urban systems.

“The leap from seeing to reasoning is the difference between AI that observes cities and AI that actually runs them. Vision-Language Models are powerful, but without domain-specific reasoning, policy awareness, and closed-loop accountability, they stop at insight. At EchoTwin, we’re building Physical AI that understands cities well enough to act responsibly in the real world—and prove that it worked.” — Chris Carson, Founder & Global CEO, EchoTwin AI

Why Generic VLMs Are Not Enough for Cities

General-purpose reasoning VLMs excel at:

• Object recognition and localization

• Video understanding and captioning

• Trajectory prediction and planning

• Long-context, multi-step reasoning

But urban operations introduce constraints that generic models do not natively solve:

• Policy awareness (local codes, SLAs, enforcement rules)

• Operational ownership (who fixes what, when, and how)

• Verification requirements (proof that an issue was resolved)

• Edge deployment constraints (cost, latency, power, privacy)

• Auditability (evidence chains, timestamps, compliance)

EchoTwin’s VLMs are not designed to “describe the city.” They are designed to run the city.

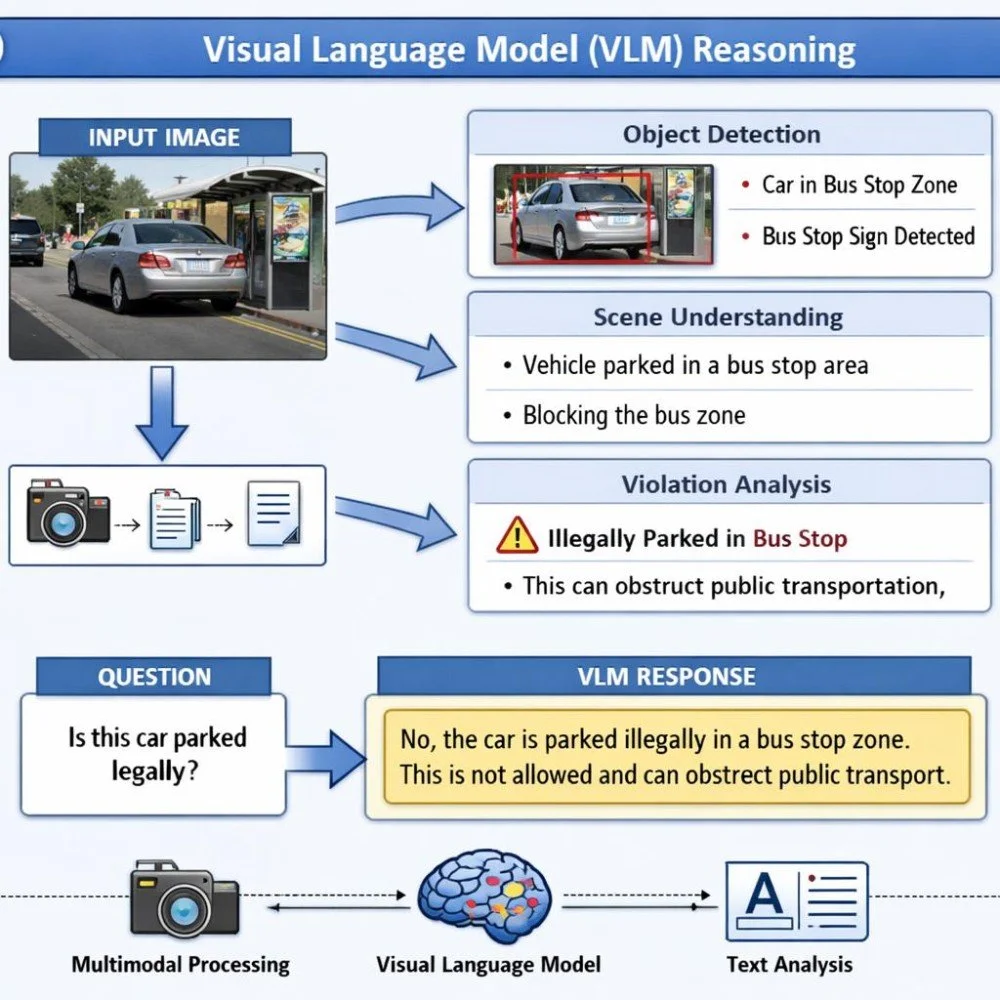

EchoTwin’s proprietary VLMs are built around a Detect → Understand → Act → Verify loop.

1) Spatio-Temporal Urban Understanding

Our models reason over space and time, not just frames:

• Persistent tracking of assets across routes and days

• Precise localization (lane-level, curb-level, sign-level)

• Change detection vs. anomaly detection

• Temporal attribution (when did this degrade, worsen, or resolve?)

This enables infrastructure intelligence such as:

• Sand accumulation trends

• Progressive road surface degradation

• Vegetation growth affecting visibility

• Recurrent illegal dumping hotspots
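
To make temporal attribution concrete, here is a minimal sketch of how dated observations of one tracked asset could be turned into "when did this degrade" and "when did it resolve" answers. It is an illustration only, not EchoTwin's implementation: the `Observation` type, the 0-to-1 severity score, and the 0.5 threshold are all assumptions made for this example.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, Optional, Tuple


@dataclass(frozen=True)
class Observation:
    """Hypothetical record: one dated condition score for a tracked asset.

    severity is assumed to run from 0.0 (good) to 1.0 (worst).
    """
    asset_id: str
    day: date
    severity: float


def attribute_change(
    observations: Iterable[Observation], threshold: float = 0.5
) -> Tuple[Optional[date], Optional[date]]:
    """Return (degraded_on, resolved_on) for one asset's history.

    degraded_on: first day the severity reached the threshold.
    resolved_on: first later day it dropped back below, else None.
    """
    degraded_on: Optional[date] = None
    resolved_on: Optional[date] = None
    for obs in sorted(observations, key=lambda o: o.day):
        if degraded_on is None:
            if obs.severity >= threshold:
                degraded_on = obs.day
        elif obs.severity < threshold:
            resolved_on = obs.day
            break
    return degraded_on, resolved_on


# Illustrative data: a sign observed on four weekly passes.
history = [
    Observation("sign-17", date(2025, 3, 1), 0.1),
    Observation("sign-17", date(2025, 3, 8), 0.6),
    Observation("sign-17", date(2025, 3, 15), 0.8),
    Observation("sign-17", date(2025, 3, 22), 0.2),
]
degraded_on, resolved_on = attribute_change(history)
```

A single threshold is the simplest possible choice; a production system would more plausibly smooth scores across passes before attributing a change, so that one noisy frame does not open or close an issue.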

2) Contextual & Policy-Aware Reasoning

Unlike generic VLMs, EchoTwin models embed:

• Local regulations and service standards

• Asset ownership logic (DOT vs. Public Works vs. contractor)

• Severity and prioritization rules

• Enforcement vs. maintenance differentiation

The model does not just “see a dirty sign” or a “bus stop violation”; it understands whether that condition is actionable, non-compliant, urgent, or informational.
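
One way to picture policy-aware reasoning is as a lookup from a detected condition to an owner and a disposition. The sketch below is hypothetical: the rule table, the owners assigned to each condition, and the severity cut-offs are invented for illustration and do not reflect any real city's codes or EchoTwin's internal logic.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class Disposition(Enum):
    ACTIONABLE = "actionable"
    NON_COMPLIANT = "non_compliant"
    URGENT = "urgent"
    INFORMATIONAL = "informational"


@dataclass(frozen=True)
class PolicyRule:
    """Hypothetical policy entry for one condition type."""
    owner: str        # who is responsible for the fix
    kind: str         # "maintenance" or "enforcement"
    act_at: float     # severity at or above which action is required
    urgent_at: float  # severity at or above which it is urgent


# Assumed example policy; real rules would come from local codes and SLAs.
POLICY = {
    "dirty_sign": PolicyRule("Public Works", "maintenance", 0.4, 0.9),
    "bus_stop_violation": PolicyRule("DOT", "enforcement", 0.0, 0.8),
}


def classify(condition: str, severity: float) -> Tuple[Disposition, Optional[str]]:
    """Map a detected condition and severity to (disposition, owner)."""
    rule = POLICY.get(condition)
    if rule is None:
        # No policy covers this condition: record it, route it nowhere.
        return Disposition.INFORMATIONAL, None
    if severity >= rule.urgent_at:
        return Disposition.URGENT, rule.owner
    if severity >= rule.act_at:
        if rule.kind == "enforcement":
            return Disposition.NON_COMPLIANT, rule.owner
        return Disposition.ACTIONABLE, rule.owner
    return Disposition.INFORMATIONAL, rule.owner
```

Under these assumed rules, `classify("dirty_sign", 0.5)` yields an actionable maintenance item for Public Works, while the same sign at severity 0.1 is only informational.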

3) Action Routing, Not Just Insight

EchoTwin VLMs are integrated directly into municipal workflows:

• Automatic work order generation

• Evidence packaging for enforcement or compliance

• Priority queues for operators

• API-level integration into city systems

This is where Physical AI becomes operational—not another dashboard.
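
As a rough illustration of action routing, the sketch below turns a detection into a work order with an evidence fingerprint and places it in an operator priority queue. Every field name here is an assumption made for the example; a real integration would follow the schema of the city's own work management system.

```python
import hashlib
import heapq
import json


def build_work_order(detection: dict, evidence: bytes) -> dict:
    """Package a detection as a work order (hypothetical schema).

    The SHA-256 digest lets a reviewer later check that the stored
    evidence file is the one this order was created from.
    """
    return {
        "asset_id": detection["asset_id"],
        "condition": detection["condition"],
        "priority": detection["priority"],  # assumed: 1 = most urgent
        "detected_at": detection["detected_at"],
        "evidence_sha256": hashlib.sha256(evidence).hexdigest(),
    }


class OperatorQueue:
    """Priority queue of work orders; lowest priority number pops first."""

    def __init__(self) -> None:
        self._heap = []
        self._count = 0  # tie-breaker keeps insertion order within a priority

    def push(self, order: dict) -> None:
        heapq.heappush(self._heap, (order["priority"], self._count, order))
        self._count += 1

    def pop(self) -> dict:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


queue = OperatorQueue()
queue.push(build_work_order(
    {"asset_id": "sign-17", "condition": "dirty_sign", "priority": 3,
     "detected_at": "2025-03-08T09:15:00Z"},
    b"<image bytes>",
))
queue.push(build_work_order(
    {"asset_id": "stop-04", "condition": "bus_stop_violation", "priority": 1,
     "detected_at": "2025-03-08T09:20:00Z"},
    b"<video bytes>",
))
next_order = queue.pop()              # the priority-1 bus stop case
payload = json.dumps(next_order)      # ready to send to a city system's API
```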

4) Closed-Loop Verification

A defining difference from most vision systems is what happens after action is taken. The model:

• Re-checks the location

• Confirms remediation

• Quantifies time-to-resolution

• Produces auditable KPIs

Cities move from reactive inspection toward self-healing operations, in which each detected issue is tracked until its fix is confirmed.
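
The KPIs mentioned above can be as simple as counts and a median. The following sketch assumes each case records when the issue was detected and when a re-check confirmed it was cleared; the field names and sample dates are hypothetical.

```python
from datetime import datetime
from statistics import median


def resolution_kpis(cases: list) -> dict:
    """Summarize closed-loop outcomes for a list of cases.

    Each case is a dict with 'detected_at' and 'verified_clear_at'
    datetimes; 'verified_clear_at' stays None until a re-check
    confirms the fix.
    """
    durations = [
        case["verified_clear_at"] - case["detected_at"]
        for case in cases
        if case["verified_clear_at"] is not None
    ]
    return {
        "total": len(cases),
        "verified_resolved": len(durations),
        "open": len(cases) - len(durations),
        "median_time_to_resolution": median(durations) if durations else None,
    }


# Illustrative cases: three verified fixes and one still open.
cases = [
    {"detected_at": datetime(2025, 3, 1), "verified_clear_at": datetime(2025, 3, 3)},
    {"detected_at": datetime(2025, 3, 2), "verified_clear_at": datetime(2025, 3, 7)},
    {"detected_at": datetime(2025, 3, 4), "verified_clear_at": datetime(2025, 3, 7)},
    {"detected_at": datetime(2025, 3, 5), "verified_clear_at": None},
]
kpis = resolution_kpis(cases)
```

Counting a case as resolved only once a re-check confirms it, rather than when the work order is closed, is what separates verification from ordinary ticket tracking.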

Domain-Specific Reasoning Models for Parking Compliance: Policy-Aware Enforcement at the Curb

Parking and curb compliance is one of the most operationally complex and politically sensitive challenges cities face. The curb is dynamic: permitted uses change by time of day, day of week, vehicle type, and location-specific rules—and violations are often contested without clear, defensible evidence.

EchoTwin’s proprietary VLMs extend beyond “license plate capture” into curbside understanding and policy-aware decisioning, enabling cities to:

• Detect: Identify stopped/parked vehicles, occupancy patterns, double-parking, blocking of bus lanes or curb zones, and obstruction of access points.

• Understand: Interpret context—signage, curb markings, geofenced rules, allowable dwell time, and special cases (loading, ADA, emergency, municipal vehicles).

• Act: Route the event through compliant workflows—creating evidence packages, officer review queues, or automated referrals based on local program design.

• Verify: Re-scan the location to confirm clearance or remediation, closing the loop and producing measurable outcomes.
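
The "Understand" step can be sketched as evaluating a stop against a time-dependent curb rule. The example below is a simplified assumption, not a description of any real program: the `CurbRule` fields, the exempt vehicle types, and the outcome labels are invented for illustration, and the rule window is checked only at arrival time.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta

# Assumed special cases that bypass the rule entirely.
EXEMPT_TYPES = frozenset({"emergency", "municipal"})


@dataclass(frozen=True)
class CurbRule:
    """Hypothetical curb rule for one zone."""
    zone_id: str
    days: frozenset       # weekdays in effect, Monday = 0
    start: time           # rule window start (inclusive)
    end: time             # rule window end (exclusive)
    allowed_types: frozenset
    max_dwell: timedelta


def evaluate_stop(rule: CurbRule, vehicle_type: str,
                  arrived_at: datetime, observed_at: datetime) -> str:
    """Return an outcome label for one observed stop in the zone."""
    if vehicle_type in EXEMPT_TYPES:
        return "exempt"
    in_window = (arrived_at.weekday() in rule.days
                 and rule.start <= arrived_at.time() < rule.end)
    if not in_window:
        return "rule_not_in_effect"
    if vehicle_type not in rule.allowed_types:
        return "review:vehicle_type_not_permitted"
    if observed_at - arrived_at > rule.max_dwell:
        return "review:dwell_exceeded"
    return "compliant"


# Example: a weekday loading zone, 07:00 to 19:00, 30-minute limit.
loading_zone = CurbRule(
    zone_id="zone-A",
    days=frozenset({0, 1, 2, 3, 4}),
    start=time(7, 0),
    end=time(19, 0),
    allowed_types=frozenset({"commercial"}),
    max_dwell=timedelta(minutes=30),
)
arrival = datetime(2025, 3, 3, 9, 0)  # a Monday morning
outcome = evaluate_stop(loading_zone, "commercial",
                        arrival, arrival + timedelta(minutes=45))
```

The outcomes are labeled "review" rather than "violation" on purpose: in this sketch the rule check only nominates a case, and the decision stays with whatever officer review or referral workflow the local program defines.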

This approach turns curb compliance from periodic enforcement into a continuous, auditable, and scalable operational capability, aligned with modern city goals: reliable transit, safer streets, and efficient curb utilization.